Building a Live Coding Audio Playground

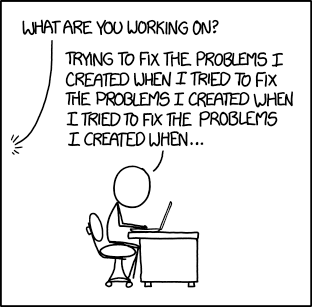

Two weeks at Recurse Center went by fast! I started out trying to build an Audio Units extension and, in a classic [yak shave](https://xkcd.com/1739/), ended up building a live coding audio playground. (I have not yet finished the original project.)

If you just want to play around with the app, here it is: [fxplayground](https://fxplayground.pages.dev). If you want to hear how I made it, then keep reading!

My initial goal at RC was to build an [Audio Units](https://en.wikipedia.org/wiki/Audio_Units) (AU) extension. AU is Apple’s audio plugin system, which works with apps like Logic and GarageBand. There are other plugin systems,[1] but I use Logic when recording audio for myself and for [my band](https://babygotbacktalk.bandcamp.com), so I wanted to try extending it.

Apple actually has a [tutorial on creating an AU extension](https://developer.apple.com/documentation/avfaudio/audio_engine/audio_units/creating_an_audio_unit_extension), complete with a good starter template. Almost too good: it gives you a finished (albeit basic) AU extension, ready to compile and run. Great for getting up and running quickly, but bad for building a mental model of how it works.

I tend to learn by exploring, so I decided to try building a low-pass filter. And let me tell you: building an audio effect in Xcode is like pulling teeth.

I mean, okay. It’s not that bad. But I’m a disciple of the [Bret Victor school of thought](https://www.youtube.com/watch?v=PUv66718DII), where trying to create something without immediate feedback is like working without one of your senses. So I decided to build an environment in which I could get that immediate feedback!

The rough plan: let users upload audio, provide a text field in which to write their effect, and display a visualization of both the original audio signal (“dry”) and the audio signal modified by the user’s code (“wet”). A perfect fit for a small single-page app!

I built the app using [Svelte](https://svelte.dev/). I know I wrote before about [defaulting to React for new projects](https://jakelazaroff.com/words/no-one-ever-got-fired-for-choosing-react/), but what is even the point of being at RC if not to try new things? Plus, the design I had planned didn’t involve any rich widgets,[2] so I wouldn’t need any component libraries: almost all the complexity was in the audio code.

Some more assorted thoughts about Svelte:

- Single-file components are such a pleasant authoring experience! I never want to build an app without them. Someone please bring them to React.

- I’m wary of two-way binding after years of working with Angular 1, but it’s really convenient to be able to just define functions within a component without the rigamarole of `useImperativeHandle`.

- No `useEffect`, my most hated hook! [Reactive blocks](https://learn.svelte.dev/tutorial/reactive-statements) are way less error-prone, although the lack of a “cleanup function” definitely made my life hard for a bit.

Ultimately, I really enjoyed Svelte! I’d still be hesitant to build a large app with it, but I’ll definitely be using it again for smaller side projects like this.

Anyway, back to the audio code: the [Web Audio API](https://developer.mozilla.org/en-US/docs/Web/API/Web_Audio_API) is pretty incredible. It lets you construct a full audio routing graph, with oscillators and visualizers and all sorts of processing nodes. The secret sauce here is the [`AudioWorklet`](https://developer.mozilla.org/en-US/docs/Web/API/AudioWorklet), which lets you run audio processing code off the main thread.

An audio worklet has two parts: the [`AudioWorkletProcessor`](https://developer.mozilla.org/en-US/docs/Web/API/AudioWorkletProcessor), which is where you write your audio processing code, and an [`AudioWorkletNode`](https://developer.mozilla.org/en-US/docs/Web/API/AudioWorkletNode), which is an object that exists in the main thread and gets connected to the rest of your audio routing graph. So basically, your UI code interacts with the `AudioWorkletNode`, but the `AudioWorkletProcessor` is doing the real work “behind the scenes”.
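To make the processing side a bit more concrete, here is a minimal sketch of what a `process` method works with. `GainSketch` is a hypothetical plain class standing in for an `AudioWorkletProcessor` subclass so that it can run outside a browser; the `inputs`/`outputs` layout (indexed by input, then channel, then sample) follows the Web Audio API, but halving the signal is just an illustrative effect.

```typescript
// One block of audio: indexed as [input][channel][sample].
type AudioBlock = Float32Array[][];

// Hypothetical stand-in for a class that would extend AudioWorkletProcessor
// in a real worklet module. Only the shape of process() is the same.
class GainSketch {
  process(inputs: AudioBlock, outputs: AudioBlock): boolean {
    const input = inputs[0] ?? [];
    const output = outputs[0] ?? [];

    for (let channel = 0; channel < output.length; channel++) {
      const dry = input[channel];
      const wet = output[channel];
      if (!dry || !wet) continue; // an unconnected input has no channels

      // write the processed samples into the output buffer in place
      for (let i = 0; i < wet.length; i++) wet[i] = (dry[i] ?? 0) * 0.5;
    }

    // returning true asks the browser to keep the processor alive
    return true;
  }
}

// Simulate one render call with a single mono channel of four samples.
const gainIn: AudioBlock = [[new Float32Array([1, -1, 0.5, 0])]];
const gainOut: AudioBlock = [[new Float32Array(4)]];
new GainSketch().process(gainIn, gainOut);
```

In the browser, the class would extend `AudioWorkletProcessor` and be registered with `registerProcessor`, and the buffers would be supplied by the audio rendering thread rather than built by hand.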

At a high level, here’s how the playground works:

- The user types code in the editor.[3]

- That code gets compiled into an `AudioWorkletProcessor`.

- The app creates a corresponding `AudioWorkletNode` and connects it to the audio routing graph.

Making an audio worklet should be fairly simple: create a separate JS file with a class extending `AudioWorkletProcessor`, create an `AudioContext` and call its `audioWorklet.addModule` method with that file’s URL, then create an `AudioWorkletNode` with the name of that processor.[4] That’s all well and good, but the JS file doesn’t actually exist: it’s based on user-supplied code. I had a hunch, though, that I could fake it with [`URL.createObjectURL`](https://developer.mozilla.org/en-US/docs/Web/API/URL/createObjectURL_static).

That hunch turned out to be correct! If this app has a beating heart, it’s this compile function:

```ts
let n = 0n;

async function compile(ctx: AudioContext, code: string) {
  // each AudioWorkletProcessor needs a unique name
  const name = `${++n}`;

  // the source code of the AudioWorkletProcessor module
  // (the name is quoted so the generated module passes it as a string)
  const src = `
    class Filter extends AudioWorkletProcessor {
      process(inputs, outputs, params) {
        ${code}
      }
    }

    registerProcessor("${name}", Filter);
  `;

  // create a fake JS file from the source code
  const file = new File([src], "filter.js");

  // create a URL for the fake JS file
  const url = URL.createObjectURL(file.slice(0, file.size, "application/javascript"));

  // add the fake JS file as an AudioWorkletProcessor
  await ctx.audioWorklet.addModule(url);

  // revoke the URL so as to not leak memory
  URL.revokeObjectURL(url);

  // create a new AudioWorkletNode from the AudioWorkletProcessor registered with `name`
  return new AudioWorkletNode(ctx, name);
}
```

Yup, you’re reading right: most of it is just a big ol’ hardcoded string of JS code! The user’s code gets interpolated into the `process` method body, which gets called repeatedly as audio flows through the graph. The `compile` function runs in a Svelte reactive block, so it creates a new `AudioWorkletNode` whenever the user changes the code. That node then gets connected to the larger audio routing graph. The graph is still pretty small, though: `<audio>` tag to `MediaElementAudioSourceNode` to `AudioWorkletNode` to the `AudioContext` destination.
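For a sense of what might go into the editor, here is a sketch of a one-pole low-pass filter written as a `process` body. To keep it runnable outside a browser it uses `new Function` as a stand-in for the worklet class; that is an assumption made for illustration, not how the playground compiles code. The `this.prev` state field and the `0.1` smoothing coefficient are also hypothetical.

```typescript
// Hypothetical user code: the text that would be typed into the editor and
// interpolated into the process() method body.
const userCode = `
  const input = inputs[0][0];
  const output = outputs[0][0];
  const a = 0.1; // smoothing coefficient; smaller means a lower cutoff
  let prev = this.prev || 0;
  for (let i = 0; i < input.length; i++) {
    // one-pole low-pass: move a fraction of the way toward each new sample
    prev = prev + a * (input[i] - prev);
    output[i] = prev;
  }
  this.prev = prev; // keep the filter state between calls
  return true;
`;

// Stand-in for the worklet: wrap the text in a function with the same
// parameter names as process(). The real app generates a class instead.
const processBody = new Function("inputs", "outputs", "params", userCode);

// `filterState` plays the role of the processor instance (`this`).
const filterState: { prev?: number } = {};
const stepIn = [[new Float32Array([1, 1, 1, 1])]];
const stepOut = [[new Float32Array(4)]];
const keepAlive = processBody.call(filterState, stepIn, stepOut, {});
```

Feeding in a sudden jump from silence to full volume, the output rises gradually instead of jumping, which is the smoothing behavior a low-pass filter is after. State stored on `this` survives between calls only as long as the same processor instance is in use.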

If you’ve tried the app already, you might be wondering about the parameters: the table with the sliders on the bottom right. Those are actually part of the audio worklet spec! You can define a static getter on your `AudioWorkletProcessor` subclass called [`parameterDescriptors`](https://developer.mozilla.org/en-US/docs/Web/API/AudioWorkletProcessor/parameterDescriptors) that lets you configure the processor from the main thread. Letting the user tweak them as sliders is important because they can really experiment with their effects: rather than having to type in different numbers, they can get instant feedback on a range of values.

Here’s how that fits into the compile function (omitting code from the previous example):

```ts
interface Parameter {
  defaultValue: number;
  minValue: number;
  maxValue: number;
  name: string;
  automationRate: "k-rate";
}

async function compile(ctx: AudioContext, code: string, params: Parameter[]) {
  // ...

  const src = `
    class Filter extends AudioWorkletProcessor {
      process(inputs, outputs, params) {
        ${code}
      }

      static get parameterDescriptors() {
        return ${JSON.stringify(params)};
      }
    }

    registerProcessor("${name}", Filter);
  `;

  // ...
}
```

The `Parameter` data structure is exactly what the `AudioWorkletProcessor` expects to be returned from `parameterDescriptors`![5] The array just needs to be stringified to JSON so that the generated code doesn’t end up containing `[object Object]`, which would be invalid JS.
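The difference between interpolating the array directly and stringifying it first can be seen in isolation. This is a small sketch under stated assumptions: `ParamSketch` mirrors the `Parameter` interface so the snippet runs on its own, and the `cutoff` parameter is made up.

```typescript
// Same shape as the Parameter interface above, redeclared so this runs alone.
interface ParamSketch {
  name: string;
  defaultValue: number;
  minValue: number;
  maxValue: number;
  automationRate: "k-rate";
}

// Hypothetical parameter, purely for illustration.
const sketchParams: ParamSketch[] = [
  { name: "cutoff", defaultValue: 0.5, minValue: 0, maxValue: 1, automationRate: "k-rate" },
];

// Interpolating the array directly calls toString() on each object...
const broken = `return ${sketchParams};`;

// ...whereas JSON text is also valid JS array-literal syntax.
const working = `return ${JSON.stringify(sketchParams)};`;

// Evaluating the generated text gives back an equivalent array.
const roundTripped = new Function(working)() as ParamSketch[];
```

Here `broken` contains the text `return [object Object];`, which fails to parse as JavaScript, while `working` contains a complete array literal.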

Initially, the slider just controlled the `defaultValue` property, and the whole audio worklet was recreated whenever it changed. The performance seemed fine, since the generated JS file is loaded from memory, but it had the annoying side effect of removing any state set in the worklet class. That made the slider much less useful for anything requiring more than a frame or so of state, since state wouldn’t be retained as the slider moved. Ultimately, I just set `defaultValue` to the average of `minValue` and `maxValue` and made the slider call the parameter’s [`setValueAtTime`](https://developer.mozilla.org/en-US/docs/Web/API/AudioParam/setValueAtTime) method.
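A rough sketch of that slider wiring might look like the following. The `ParamLike` and `NodeLike` interfaces, `midpoint` and `onSliderInput` are all hypothetical names; they describe only the parts of `AudioParam` and `AudioWorkletNode` that the handler touches, so the snippet can be exercised with a fake node outside a browser.

```typescript
// Minimal structural types so the sketch runs without a browser. A real
// AudioParam and AudioWorkletNode should satisfy these shapes.
interface ParamLike {
  setValueAtTime(value: number, startTime: number): unknown;
}
interface NodeLike {
  parameters: { get(key: string): ParamLike | undefined };
}

// Start each slider in the middle of its range.
function midpoint(minValue: number, maxValue: number): number {
  return (minValue + maxValue) / 2;
}

// Hypothetical slider handler: update the live parameter in place instead of
// recompiling the worklet, so any state held by the processor survives.
function onSliderInput(node: NodeLike, key: string, value: number, now: number): boolean {
  const param = node.parameters.get(key);
  if (!param) return false; // no parameter registered under that name
  param.setValueAtTime(value, now); // in the browser, `now` would be ctx.currentTime
  return true;
}

// Fake node that records calls, standing in for an AudioWorkletNode.
const recorded: Array<[number, number]> = [];
const fakeParam: ParamLike = {
  setValueAtTime: (value, startTime) => recorded.push([value, startTime]),
};
const fakeNode: NodeLike = { parameters: new Map([["cutoff", fakeParam]]) };
const applied = onSliderInput(fakeNode, "cutoff", 0.25, 0);
```

Because the node is never recreated, whatever the processor has stored on `this` is still there after the slider moves.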

In addition to hearing the effect, the app also visualizes it so the user can see how their filtered signal compares to the original one. This is where the [`AnalyserNode`](https://developer.mozilla.org/en-US/docs/Web/API/AnalyserNode)[6] comes in: it takes a [Fast Fourier Transform](https://en.wikipedia.org/wiki/Fast_Fourier_transform) of the audio, so I can plot the frequencies as a graph. This is particularly important for effects like equalizers, where the point of the effect is to amplify or attenuate certain frequencies compared to the dry signal.
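To give a feel for the data behind such a plot, here is a small sketch of two helpers. `binFrequency` and `spectrumDelta` are hypothetical names, and the decibel readings are made up; in the browser they would come from calling `getFloatFrequencyData` on one analyser for the dry signal and one for the wet signal.

```typescript
// Frequency in Hz that bin `i` corresponds to. An analyser with a given
// fftSize reports fftSize / 2 bins spread linearly from 0 Hz to sampleRate / 2.
function binFrequency(i: number, sampleRate: number, fftSize: number): number {
  return (i * sampleRate) / fftSize;
}

// Per-bin difference in dB between the wet and dry spectra. Positive values
// mean the effect boosted that frequency; negative values mean it cut it.
function spectrumDelta(dry: Float32Array, wet: Float32Array): number[] {
  const delta: number[] = [];
  for (let i = 0; i < Math.min(dry.length, wet.length); i++) {
    delta.push((wet[i] ?? 0) - (dry[i] ?? 0));
  }
  return delta;
}

// Made-up dB readings, standing in for what getFloatFrequencyData would fill.
const drySpectrum = new Float32Array([-30, -30, -30, -30]);
const wetSpectrum = new Float32Array([-30, -33, -42, -60]);
const lowPassDelta = spectrumDelta(drySpectrum, wetSpectrum);
```

With these readings the higher bins are cut progressively more than the lower ones, which is the shape a low-pass filter would be expected to produce.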

Did you click on that link? Then you found the share feature! It just serializes the code and parameters to the URL. When you load the page, if it detects the right query string, it pre-populates everything from the URL. That way, you can send a link to your friends, and they can play around with the effects you’ve made.
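A share link along these lines could be built with `URLSearchParams`. This is only a sketch: the `SharedState` shape, the `code` and `params` query keys, and the `toQuery`/`fromQuery` helpers are assumptions for illustration, and the real app’s format may differ.

```typescript
// Hypothetical shape of what gets shared; the real app's format may differ.
interface SharedState {
  code: string;
  params: { name: string; minValue: number; maxValue: number }[];
}

// Serialize the state into a query string. URLSearchParams handles escaping.
function toQuery(state: SharedState): string {
  const query = new URLSearchParams();
  query.set("code", state.code);
  query.set("params", JSON.stringify(state.params));
  return query.toString();
}

// Parse a query string back into state, or null if it isn't a share link.
function fromQuery(search: string): SharedState | null {
  const query = new URLSearchParams(search);
  const code = query.get("code");
  const params = query.get("params");
  if (code === null || params === null) return null;
  try {
    return { code, params: JSON.parse(params) };
  } catch {
    return null; // malformed JSON in the link
  }
}

const shared: SharedState = {
  code: "return true; // 100% & more",
  params: [{ name: "cutoff", minValue: 0, maxValue: 1 }],
};
const restored = fromQuery("?" + toQuery(shared));
```

On page load, something like `fromQuery(location.search)` would decide whether to pre-populate the editor or fall back to the defaults.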

What next? Although there are a ton of features I’ve thought of (logging from user code, logarithmic frequency binning for the visualization, support for languages other than JS), I think I’m going to move on to something else at RC. The point of the program is to learn, and I’ve learned most of what I set out to when I built this thing. I tried out Svelte, AudioWorklets and the `<dialog>` element, figured out a method for incorporating user-generated code and learned how to build a simple low-pass filter.

I’ll probably come back to this later (or sooner, if you try it out and [let me know what you think](https://mastodon.social/@jakelazaroff)). For now, stay tuned for more RC projects!

Footnotes

1. The main one is [VST](https://en.wikipedia.org/wiki/Virtual_Studio_Technology), which was developed by Steinberg and is used by basically everyone else.

2. Well, I did need modals for help text. But luckily, the native [`<dialog>` element](https://developer.mozilla.org/en-US/docs/Web/HTML/Element/dialog) is well supported!

3. A plain `<textarea>` is not a great experience for editing code. I used [CodeMirror](https://codemirror.net/), which seems to strike a nice balance between lightweight and full-featured.

4. I’m skipping some steps here for brevity. MDN has [more complete documentation](https://developer.mozilla.org/en-US/docs/Web/API/AudioWorkletProcessor#processing_audio).

5. If you read the documentation for audio worklet parameters, you may be wondering why the `automationRate` is type `"k-rate"`, rather than `"a-rate" | "k-rate"`. `a-rate` is used when the value of a parameter can vary from sample to sample within a block of audio, whereas `k-rate` is used when it stays the same for the whole block. For the playground, parameters only ever have one value, so I don’t need to worry about `a-rate`.

6. I guess I can’t really complain about this, because so much of the world accommodates American English where we don’t deserve it, but I do wish it were spelled `AnalyzerNode` (with a “z”) (pronounced “zee”, not “zed”) (I’ll stop now).